Gemini Models with Google Vertex AI Integration for Haystack

Multi-Modal components and function calling with the new Gemini integrations for Haystack

December 18, 2023

In this article, we will introduce you to the new Google Vertex AI Integration for Haystack. While this integration introduces several new components to the Haystack ecosystem (feel free to explore the full integration repo!), we’d like to start by showcasing two components in particular: the VertexAIGeminiGenerator and the VertexAIGeminiChatGenerator, using the gemini-pro and gemini-1.5-flash models.

💚 You can run the example code showcased in this article in the accompanying Notebook

The great news is that, to access the Gemini models, you only need to authenticate with Google in the Colab (instructions are included in the Colab).

VertexAIGeminiGenerator for Question Answering on Images

The new VertexAIGeminiGenerator component allows you to query Gemini models such as gemini-pro and gemini-1.5-flash. In this example, let’s use the latter, allowing us to also make use of images in our queries.

To get started, you will need to install Haystack and the google-vertex-haystack integration:

!pip install haystack-ai google-vertex-haystack

Just like any other generator component in Haystack 2.0-Beta, to run the VertexAIGeminiGenerator on its own, we simply have to call the run() method. However, unlike our other components, the run method here expects parts as input. A Part in the Google Vertex AI API can be anything from a message, to images, or even function calls. The docstrings in the source code are the most up-to-date reference we could find. Let’s run this component with a simple query 👇

from haystack_integrations.components.generators.google_vertex import VertexAIGeminiGenerator

gemini = VertexAIGeminiGenerator(model="gemini-1.5-flash", project_id='YOUR-GCP-PROJECT-ID')

gemini.run(parts = ["What is the most interesting thing you know?"])

Querying with Images

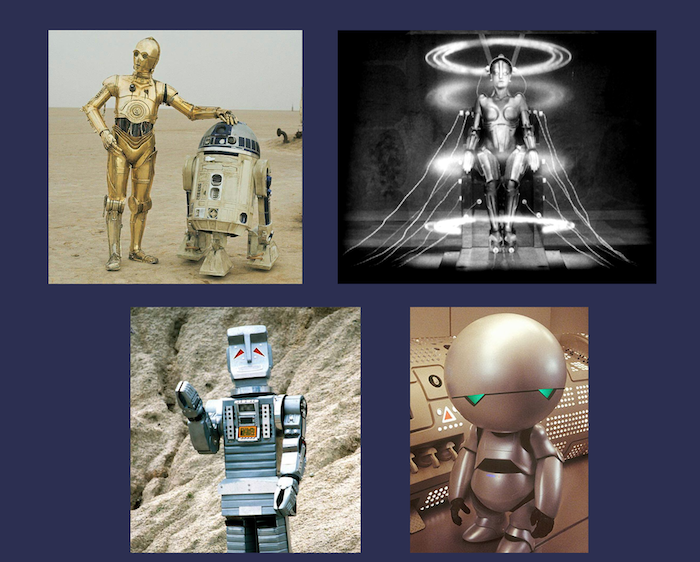

Next, let’s make use of the flexibility of parts and pass in some images alongside a question too. In the example below, we are providing 4 images containing robots, and asking gemini-1.5-flash what it can tell us about them.

import requests

from haystack.dataclasses.byte_stream import ByteStream

URLS = [

    "https://raw.githubusercontent.com/silvanocerza/robots/main/robot1.jpg",

    "https://raw.githubusercontent.com/silvanocerza/robots/main/robot2.jpg",

    "https://raw.githubusercontent.com/silvanocerza/robots/main/robot3.jpg",

    "https://raw.githubusercontent.com/silvanocerza/robots/main/robot4.jpg"

]

images = [

    ByteStream(data=requests.get(url).content, mime_type="image/jpeg")

    for url in URLS

]

result = gemini.run(parts = ["What can you tell me about these robots?", *images])

for answer in result["replies"]:

    print(answer)
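The example above hard-codes image/jpeg for every image. If your URLs mix formats, the MIME type could instead be derived from each file name. The following is a minimal sketch using only the Python standard library; the helper name guess_image_mime_type and its fallback value are our own illustrative choices and are not part of Haystack or the Vertex AI integration.

```python
import mimetypes


def guess_image_mime_type(url: str, default: str = "image/jpeg") -> str:
    """Guess an image MIME type from the file extension in a URL (hypothetical helper)."""
    mime_type, _encoding = mimetypes.guess_type(url)
    # Fall back to the default when the extension is unknown or not an image.
    if mime_type is None or not mime_type.startswith("image/"):
        return default
    return mime_type


jpeg_type = guess_image_mime_type(
    "https://raw.githubusercontent.com/silvanocerza/robots/main/robot1.jpg"
)
png_type = guess_image_mime_type("https://example.com/robot.png")
unknown_type = guess_image_mime_type("https://example.com/robot")
```

The returned value could then be passed as mime_type when building each ByteStream.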

💡 Fun fact: We’ve noticed that Gemini consistently misidentifies the 3rd robot! Often the response is: “The third image is of Gort from the 1951 film The Day the Earth Stood Still. Gort is a robot who is sent to Earth to warn humanity about the dangers of nuclear war. He is a powerful and intelligent robot, but he is also compassionate and understanding.” However, this robot is Marvin the Paranoid Android from The Hitchhiker’s Guide to the Galaxy series. It would have been pretty silly if Gort looked like that! 😅

VertexAIGeminiChatGenerator for Function Calling

With gemini-pro, we can also start introducing function calling! So let’s see how we can do that. An important feature to note here is that function calling in this context refers to using Gemini to identify how a function should be called. To see what we mean by this, let’s see if we can build a system that can run a get_current_weather function, based on a question asked in natural language.

For this section, we will be using the new VertexAIGeminiChatGenerator component, which can optionally be initialized by providing a list of tools. This will become handy in a moment because we will be able to define functions and provide them to the generator as a list of tools.

For demonstration purposes, we’re simply creating a get_current_weather function that returns a dictionary which always tells us it’s sunny and 21.8 degrees. If it’s Celsius, that’s a good day! ☀️

from typing import Annotated

def get_current_weather(

    location: Annotated[str, "The city for which to get the weather, e.g. 'San Francisco'"] = "Munich",

    unit: Annotated[str, "The unit for the temperature, e.g. 'celsius'"] = "celsius",

):

    return {"weather": "sunny", "temperature": 21.8, "unit": unit}

Next, we transform the function into a Haystack Tool object. The descriptions of the parameters (provided using Annotated) will be included in the schema of the tool.

from haystack.tools import create_tool_from_function

weather_tool = create_tool_from_function(get_current_weather)
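To see where those parameter descriptions come from, it helps to look at how Annotated metadata can be read back at runtime. The sketch below uses only the standard library and is not how create_tool_from_function is implemented; the helper describe_parameters and the shape of the dictionary it returns are assumptions made for illustration.

```python
import inspect
from typing import Annotated, get_args, get_origin, get_type_hints


def get_current_weather(
    location: Annotated[str, "The city for which to get the weather, e.g. 'San Francisco'"] = "Munich",
    unit: Annotated[str, "The unit for the temperature, e.g. 'celsius'"] = "celsius",
):
    return {"weather": "sunny", "temperature": 21.8, "unit": unit}


def describe_parameters(func):
    """Collect type name, description and default for each annotated parameter (hypothetical helper)."""
    # include_extras=True keeps the Annotated metadata instead of stripping it.
    hints = get_type_hints(func, include_extras=True)
    described = {}
    for name, parameter in inspect.signature(func).parameters.items():
        hint = hints[name]
        description = None
        base_type = hint
        if get_origin(hint) is Annotated:
            # The first argument is the real type, the rest is free-form metadata.
            base_type, *metadata = get_args(hint)
            description = next((m for m in metadata if isinstance(m, str)), None)
        described[name] = {
            "type": base_type.__name__,
            "description": description,
            "default": parameter.default,
        }
    return described


parameters = describe_parameters(get_current_weather)
```

Here, parameters holds one entry per argument, which is roughly the information a tool schema needs so that a model can decide which values to pass.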

We can use this tool with the VertexAIGeminiChatGenerator and ask it to tell us how the function should be called to answer the question “What is the temperature in celsius in Berlin?”:

from haystack_integrations.components.generators.google_vertex import VertexAIGeminiChatGenerator

from haystack.dataclasses import ChatMessage

gemini_chat = VertexAIGeminiChatGenerator(model="gemini-pro", project_id='YOUR-GCP-PROJECT-ID', tools=[weather_tool])

user_message = [ChatMessage.from_user("What is the temperature in celsius in Berlin?")]

replies = gemini_chat.run(messages=user_message)["replies"]

replies

With the response we get from this interaction, we can call the function get_current_weather using the ToolInvoker component and proceed with our chat:

from haystack.components.tools import ToolInvoker

tool_invoker = ToolInvoker(tools=[weather_tool])

tool_messages = tool_invoker.run(messages=replies)["tool_messages"]

messages = user_message + replies + tool_messages

res = gemini_chat.run(messages = messages)

res["replies"][0].text
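Conceptually, the model never executes anything itself: it returns the name of a function and the arguments to use, and our code performs the call and feeds the result back. The sketch below mimics that round trip with plain dictionaries. The message layout, the hard-coded model reply, and the run_tool_call helper are illustrative assumptions and do not reflect the actual ChatMessage or ToolInvoker internals.

```python
def get_current_weather(location: str = "Munich", unit: str = "celsius") -> dict:
    return {"weather": "sunny", "temperature": 21.8, "unit": unit}


# Registry mapping tool names to Python callables.
TOOLS = {"get_current_weather": get_current_weather}


def run_tool_call(tool_call: dict) -> dict:
    """Look up the requested function and call it with the model-chosen arguments."""
    function = TOOLS[tool_call["name"]]
    return function(**tool_call["arguments"])


# 1. The user asks a question in natural language.
messages = [{"role": "user", "content": "What is the temperature in celsius in Berlin?"}]

# 2. Hard-coded stand-in for the kind of reply a model could produce:
#    no answer yet, only a description of how the function should be called.
tool_call = {
    "name": "get_current_weather",
    "arguments": {"location": "Berlin", "unit": "celsius"},
}
messages.append({"role": "assistant", "tool_call": tool_call})

# 3. Our code runs the function and appends the result to the conversation,
#    which would then be sent back to the model for the final answer.
tool_result = run_tool_call(tool_call)
messages.append({"role": "tool", "content": tool_result})
```

This mirrors the order used above, where the user message, the model replies, and the tool messages are concatenated before the second call to the chat generator.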

Building a Full Retrieval-Augmented Generative Pipeline

Alongside the individual use of the new Gemini components above, you can of course also use them in full Haystack pipelines. Here is an example of a RAG pipeline that does question-answering on webpages using the LinkContentFetcher and the VertexAIGeminiGenerator using the gemini-1.5-flash model 👇

As we are working on the full release of Haystack 2.0, components that are currently available in the Beta release are mostly focused on text. So, truly multi-modal applications as full Haystack pipelines are not yet possible. We are creating components that can easily handle other media like images, audio, and video, and will be back with examples soon!

from haystack_integrations.components.generators.google_vertex import VertexAIGeminiGenerator

from haystack.components.fetchers.link_content import LinkContentFetcher

from haystack.components.converters import HTMLToDocument

from haystack.components.preprocessors import DocumentSplitter

from haystack.components.rankers import TransformersSimilarityRanker

from haystack.components.builders.prompt_builder import PromptBuilder

from haystack import Pipeline

fetcher = LinkContentFetcher()

converter = HTMLToDocument()

document_splitter = DocumentSplitter(split_by="word", split_length=50)

similarity_ranker = TransformersSimilarityRanker(top_k=3)

gemini = VertexAIGeminiGenerator(model="gemini-1.5-flash", project_id='YOUR-GCP-PROJECT-ID')

prompt_template = """

According to these documents:

{% for doc in documents %}

{{ doc.content }}

{% endfor %}

Answer the given question: {{question}}

Answer:

"""

prompt_builder = PromptBuilder(template=prompt_template)

pipeline = Pipeline()

pipeline.add_component("fetcher", fetcher)

pipeline.add_component("converter", converter)

pipeline.add_component("splitter", document_splitter)

pipeline.add_component("ranker", similarity_ranker)

pipeline.add_component("prompt_builder", prompt_builder)

pipeline.add_component("gemini", gemini)

pipeline.connect("fetcher.streams", "converter.sources")

pipeline.connect("converter.documents", "splitter.documents")

pipeline.connect("splitter.documents", "ranker.documents")

pipeline.connect("ranker.documents", "prompt_builder.documents")

pipeline.connect("prompt_builder.prompt", "gemini")

Once we have the pipeline, we can run it with a query about Haystack 2.0-Beta:

question = "What do graphs have to do with Haystack?"

result = pipeline.run({"prompt_builder": {"question": question},

                       "ranker": {"query": question},

                       "fetcher": {"urls": ["https://haystack.deepset.ai/blog/introducing-haystack-2-beta-and-advent"]}})

for answer in result["gemini"]["replies"]:

    print(answer)
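To make the data flow of this pipeline easier to follow, here is a dependency-free sketch of the three middle steps: splitting, ranking, and prompt building. It is only an approximation under loud assumptions: split_by_word ignores any overlap or whitespace handling a real splitter may apply, rank_by_overlap uses naive word overlap instead of the transformer model behind TransformersSimilarityRanker, and build_prompt uses plain string formatting in place of the Jinja template. All three helper names are hypothetical.

```python
import re


def split_by_word(text: str, split_length: int = 50) -> list[str]:
    """Cut a text into consecutive chunks of at most split_length words."""
    words = text.split()
    return [
        " ".join(words[start:start + split_length])
        for start in range(0, len(words), split_length)
    ]


def rank_by_overlap(chunks: list[str], query: str, top_k: int = 3) -> list[str]:
    """Naive stand-in for a ranker: score chunks by shared words with the query."""
    query_terms = set(re.findall(r"\w+", query.lower()))

    def score(chunk: str) -> int:
        return len(query_terms & set(re.findall(r"\w+", chunk.lower())))

    # sorted() is stable, so chunks with equal scores keep their original order.
    return sorted(chunks, key=score, reverse=True)[:top_k]


def build_prompt(chunks: list[str], question: str) -> str:
    """Assemble a prompt with the same wording as the template above."""
    documents = "\n".join(chunks)
    return (
        "According to these documents:\n"
        f"{documents}\n"
        f"Answer the given question: {question}\n"
        "Answer:"
    )


# Splitting: 120 words with split_length=50 gives chunks of 50, 50 and 20 words.
chunks = split_by_word(" ".join(f"word{i}" for i in range(120)), split_length=50)

# Ranking and prompt building on a few hand-written candidate chunks.
candidates = [
    "graphs connect components",
    "pipelines are fun",
    "haystack uses graphs for pipelines",
]
question = "What do graphs have to do with Haystack?"
top_chunks = rank_by_overlap(candidates, question, top_k=2)
prompt = build_prompt(top_chunks, question)
```

In the real pipeline, the resulting prompt is what gets passed on to the Gemini generator, and only the top-ranked chunks ever reach the model.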

Now you’ve seen some of what Gemini can do, as well as how to integrate it with Haystack 🫶 If you want to learn more:

- Check out the Haystack docs or tutorials

- Try out the Gemini quickstart colab from Google

- Participate in the Advent of Haystack