Haystack RAG Pipeline with Self-Deployed AI models using NVIDIA NIMs

Last Updated: April 17, 2025

In this notebook, we will build a Haystack Retrieval Augmented Generation (RAG) pipeline using AI models self-hosted with NVIDIA Inference Microservices (NIMs).

This notebook accompanies a technical blog post that walks through deploying NVIDIA NIMs with Haystack into production.

The code examples assume that the LLM generator and retrieval embedding models have already been deployed as NIMs, following the procedure described in the technical blog post.

You can also substitute the calls to NVIDIA NIMs with the same AI models hosted by NVIDIA on ai.nvidia.com.

# install the relevant libraries

!pip install haystack-ai

!pip install nvidia-haystack

!pip install --upgrade setuptools==67.0

!pip install pydantic==1.9.0

!pip install pypdf

!pip install hayhooks

!pip install qdrant-haystack

For the Haystack RAG pipeline, we will use the Qdrant vector database, the self-hosted meta-llama3-8b-instruct model as the LLM generator, and the NV-Embed-QA model as the embedder.

In the next cell, we will set the URLs of the self-deployed NVIDIA NIMs and the Qdrant endpoint used by the QdrantDocumentStore. Adjust these values to match your setup.

# Global Variables

import os

# LLM NIM

llm_nim_model_name = "meta-llama3-8b-instruct"

llm_nim_base_url = "http://nims.example.com/llm"

# Embedding NIM

embedding_nim_model = "NV-Embed-QA"

embedding_nim_api_url = "http://nims.example.com/embedding"

# Qdrant Vector Database

qdrant_endpoint = "http://vectordb.example.com:30030"

1. Check Deployments

Let’s first check the vector database and the models self-deployed with NVIDIA NIMs in our environment. See the technical blog post for the NIM deployment steps.

We can check the AI models deployed with NIMs and the Qdrant database using simple curl commands.

1.1 Check the LLM Generator NIM

! curl '{llm_nim_base_url}/v1/models' -H 'Accept: application/json'

{"object":"list","data":[{"id":"meta-llama3-8b-instruct","object":"model","created":1716465695,"owned_by":"system","root":"meta-llama3-8b-instruct","parent":null,"permission":[{"id":"modelperm-6f996d60554743beab7b476f09356c6e","object":"model_permission","created":1716465695,"allow_create_engine":false,"allow_sampling":true,"allow_logprobs":true,"allow_search_indices":false,"allow_view":true,"allow_fine_tuning":false,"organization":"*","group":null,"is_blocking":false}]}]}

1.2 Check the Retrieval Embedding NIM

! curl '{embedding_nim_api_url}/v1/models' -H 'Accept: application/json'

{"object":"list","data":[{"id":"NV-Embed-QA","created":0,"object":"model","owned_by":"organization-owner"}]}

1.3 Check the Qdrant Database

! curl '{qdrant_endpoint}' -H 'Accept: application/json'

{"title":"qdrant - vector search engine","version":"1.9.1","commit":"97c107f21b8dbd1cb7190ecc732ff38f7cdd248f"}
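If you prefer to run these checks from Python instead of shelling out to curl, the sketch below uses only the standard library. It assumes the NIMs return the OpenAI-style model listing shown above and that the Qdrant root endpoint returns a JSON object with a version field; the helper names (fetch_json, model_ids, check_nim, qdrant_version) are hypothetical.

```python
import json
import urllib.request


def fetch_json(url, timeout=10.0):
    """GET a URL and decode its JSON body (same request as the curl calls above)."""
    request = urllib.request.Request(url, headers={"Accept": "application/json"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def model_ids(listing):
    """Extract the model IDs from an OpenAI-style /v1/models listing."""
    return [entry["id"] for entry in listing.get("data", [])]


def check_nim(base_url, expected_model):
    """Return True if the NIM at base_url lists expected_model."""
    return expected_model in model_ids(fetch_json(f"{base_url}/v1/models"))


def qdrant_version(endpoint):
    """Return the version string reported by the Qdrant root endpoint."""
    return fetch_json(endpoint)["version"]
```

For example, check_nim(llm_nim_base_url, llm_nim_model_name) and check_nim(embedding_nim_api_url, embedding_nim_model) should both return True when the deployments are healthy.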

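# Workaround: the NVIDIA Haystack components read NVIDIA_API_KEY from the environment
# by default; the self-hosted NIMs here don't require a key, so an empty value is enough.
os.environ["NVIDIA_API_KEY"] = ""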

2. Perform Indexing

Let’s first initialize the Qdrant vector database, create the Haystack indexing pipeline, and upload an example PDF. We will use the embedding model self-deployed with NIM.

# Import the relevant libraries

from haystack import Pipeline

from haystack.components.converters import PyPDFToDocument

from haystack.components.writers import DocumentWriter

from haystack.components.preprocessors import DocumentCleaner, DocumentSplitter

from haystack_integrations.document_stores.qdrant import QdrantDocumentStore

from haystack_integrations.components.embedders.nvidia import NvidiaDocumentEmbedder

# initialize document store

document_store = QdrantDocumentStore(embedding_dim=1024, url=qdrant_endpoint)

# initialize NvidiaDocumentEmbedder with the self-hosted Embedding NIM URL

embedder = NvidiaDocumentEmbedder(
    model=embedding_nim_model,
    api_url=f"{embedding_nim_api_url}/v1"
)

converter = PyPDFToDocument()

cleaner = DocumentCleaner()

splitter = DocumentSplitter(split_by='word', split_length=100)

writer = DocumentWriter(document_store)

# Create the Indexing Pipeline

indexing = Pipeline()

indexing.add_component("converter", converter)

indexing.add_component("cleaner", cleaner)

indexing.add_component("splitter", splitter)

indexing.add_component("embedder", embedder)

indexing.add_component("writer", writer)

indexing.connect("converter", "cleaner")

indexing.connect("cleaner", "splitter")

indexing.connect("splitter", "embedder")

indexing.connect("embedder", "writer")

<haystack.core.pipeline.pipeline.Pipeline object at 0x7f267972b340>

🚅 Components

- converter: PyPDFToDocument

- cleaner: DocumentCleaner

- splitter: DocumentSplitter

- embedder: NvidiaDocumentEmbedder

- writer: DocumentWriter

🛤️ Connections

- converter.documents -> cleaner.documents (List[Document])

- cleaner.documents -> splitter.documents (List[Document])

- splitter.documents -> embedder.documents (List[Document])

- embedder.documents -> writer.documents (List[Document])
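The DocumentSplitter in this pipeline is configured with split_by='word' and split_length=100. As a simplified stand-in for that step (not Haystack's implementation), the sketch below splits text on whitespace and groups every 100 words into one chunk, with an optional overlap. The real component also keeps document metadata and may handle whitespace differently; split_by_words is a hypothetical name.

```python
def split_by_words(text, split_length=100, split_overlap=0):
    """Split text into chunks of at most split_length words.

    Consecutive chunks share split_overlap words.
    """
    if split_length <= 0:
        raise ValueError("split_length must be positive")
    if not 0 <= split_overlap < split_length:
        raise ValueError("split_overlap must be in [0, split_length)")
    words = text.split()
    step = split_length - split_overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + split_length]))
        # Stop once this chunk already reaches the end of the text.
        if start + split_length >= len(words):
            break
    return chunks
```

Applied to a 250-word text with the defaults, split_by_words returns three chunks of 100, 100, and 50 words; each chunk then becomes one document to embed.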

We will index into the vector database a research paper from NVIDIA about ChipNeMo, a domain-specific LLM for chip design. The paper is available here.

# Document sources to index for embeddings

document_sources = ["./data/ChipNeMo.pdf"]

# Create embeddings

indexing.run({"converter": {"sources": document_sources}})

Calculating embeddings: 100%|██████████| 4/4 [00:00<00:00, 15.85it/s]

200it [00:00, 1293.90it/s]

{'embedder': {'meta': {'usage': {'prompt_tokens': 0, 'total_tokens': 0}}},

'writer': {'documents_written': 108}}
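The embedder in the indexing pipeline sends the document chunks to the Embedding NIM. To call that NIM directly (for example, to test it outside Haystack), the sketch below assumes an OpenAI-style POST to {embedding_nim_api_url}/v1/embeddings plus an input_type field that tells NV-Embed-QA whether it is embedding passages to index or search queries. The helper names and the exact request fields are assumptions; check the API reference for your NIM version.

```python
import json
import urllib.request


def embedding_payload(texts, model, input_type="passage"):
    """Build an embeddings request body.

    input_type is assumed to be "passage" for text being indexed and
    "query" for search queries.
    """
    if input_type not in ("passage", "query"):
        raise ValueError("input_type must be 'passage' or 'query'")
    return {"input": list(texts), "model": model, "input_type": input_type}


def embed(api_url, texts, model, input_type="passage", timeout=30.0):
    """POST texts to {api_url}/v1/embeddings and return one vector per text."""
    body = json.dumps(embedding_payload(texts, model, input_type)).encode("utf-8")
    request = urllib.request.Request(
        f"{api_url}/v1/embeddings",
        data=body,
        headers={"Content-Type": "application/json", "Accept": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        result = json.loads(response.read().decode("utf-8"))
    # OpenAI-style responses list one {"embedding": [...], "index": i} per input.
    items = sorted(result["data"], key=lambda item: item["index"])
    return [item["embedding"] for item in items]
```

For example, embed(embedding_nim_api_url, ["What is ChipNeMo?"], embedding_nim_model, input_type="query") would return a single vector; with NV-Embed-QA it should have 1024 dimensions, matching embedding_dim=1024 in the QdrantDocumentStore above.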

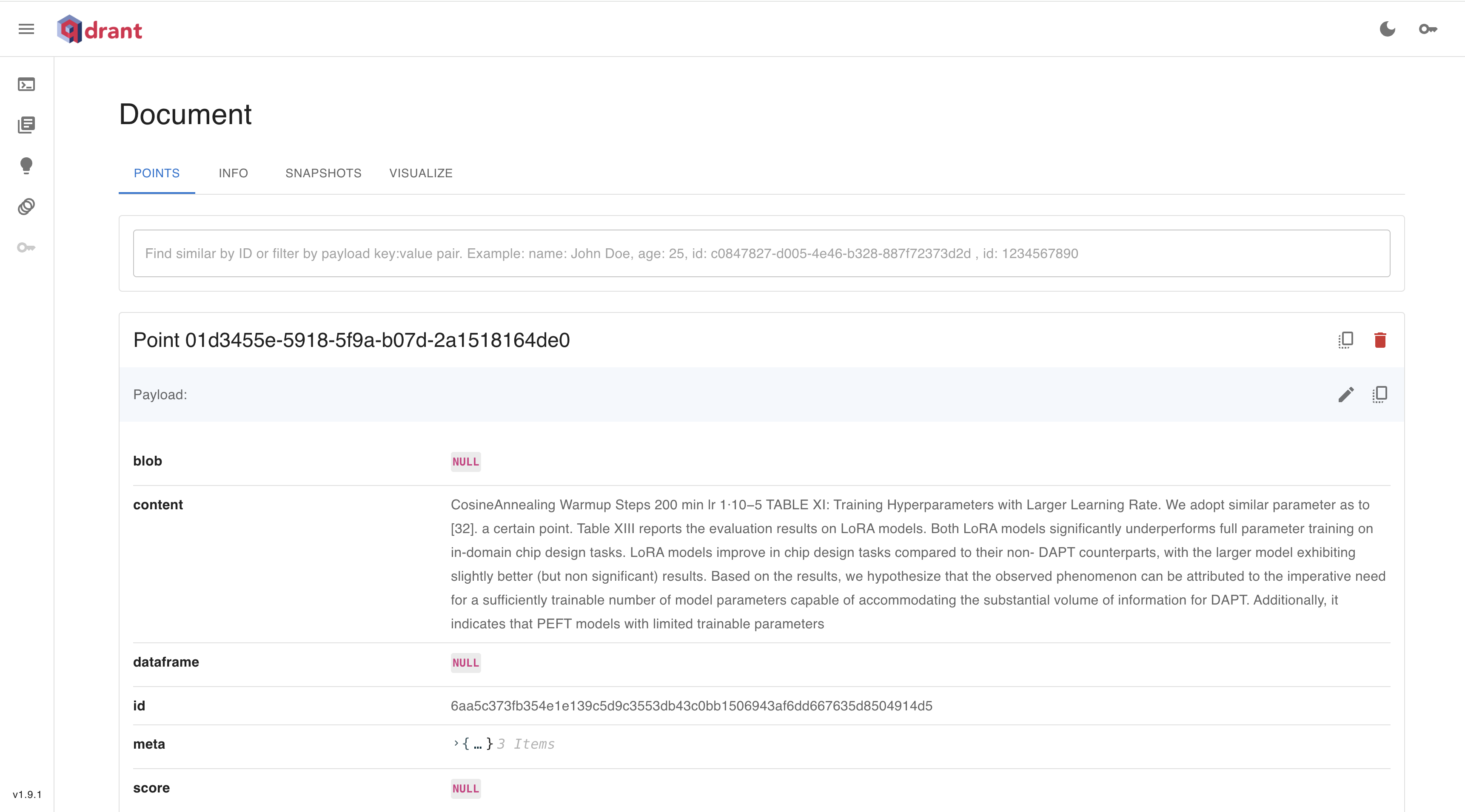

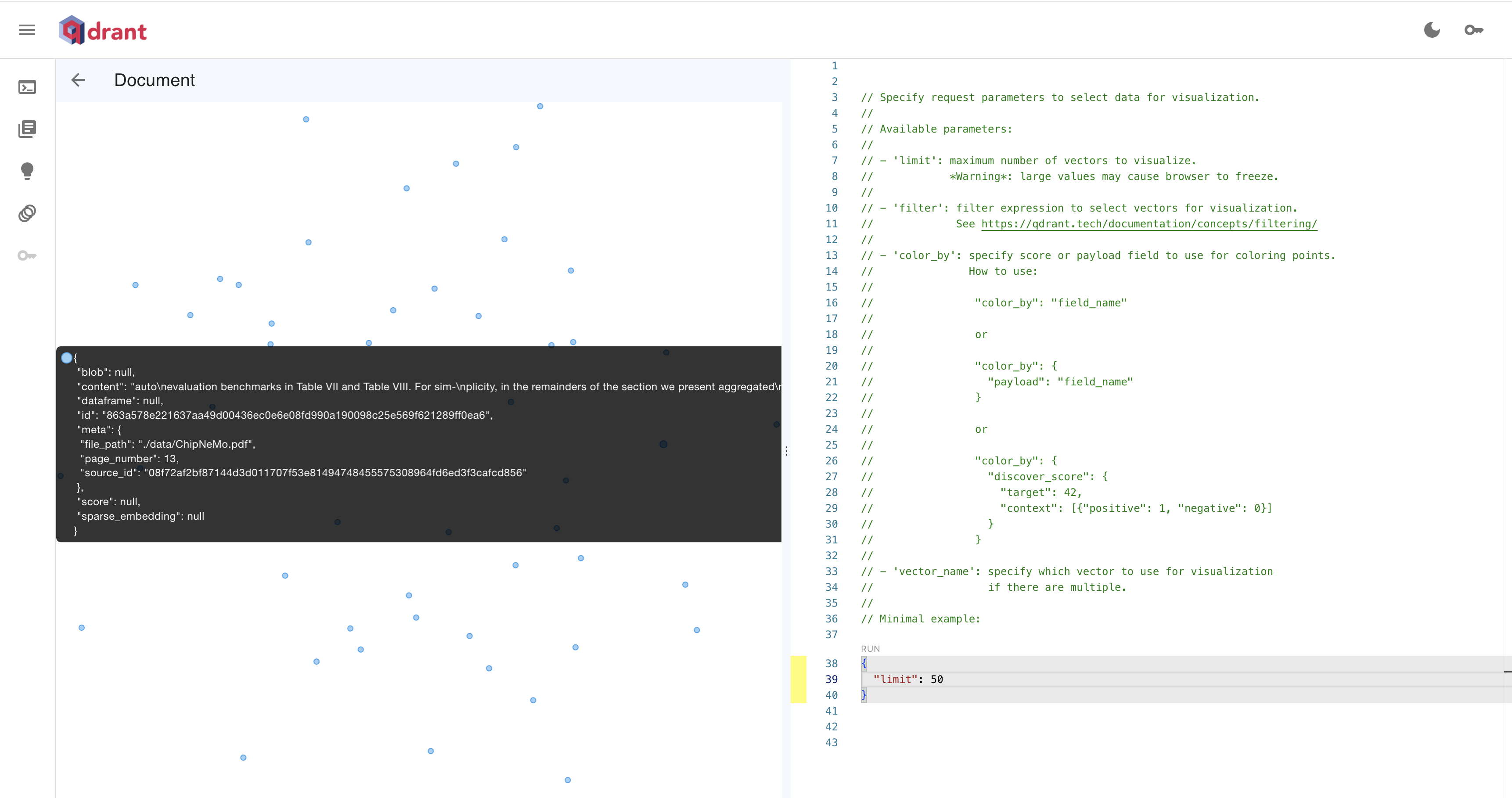

You can also inspect the Qdrant deployment through its Web UI: the stored embeddings are visible on the dashboard at qdrant_endpoint/dashboard (here, http://vectordb.example.com:30030/dashboard).
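Besides the dashboard, you can ask Qdrant's REST API how many points were written. The sketch below uses Qdrant's points count endpoint (POST /collections/{collection_name}/points/count) and assumes the collection has QdrantDocumentStore's default name, "Document"; pass a different name if you set the index parameter. The helper names are hypothetical.

```python
import json
import urllib.parse
import urllib.request


def parse_count(payload):
    """Extract N from a Qdrant count response shaped like {"result": {"count": N}, ...}."""
    return payload["result"]["count"]


def count_points(endpoint, collection="Document", timeout=10.0):
    """Return the exact number of points stored in a Qdrant collection."""
    name = urllib.parse.quote(collection, safe="")
    request = urllib.request.Request(
        f"{endpoint}/collections/{name}/points/count",
        data=json.dumps({"exact": True}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return parse_count(json.loads(response.read().decode("utf-8")))
```

If the collection was empty before indexing, count_points(qdrant_endpoint) should match the documents_written value reported by the writer (108 in the run above).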

3. Create the RAG Pipeline

Let’s now create the Haystack RAG pipeline. We will configure the LLM generator to use the LLM self-deployed with NIM.

# import the relevant libraries

from haystack import Pipeline

from haystack.utils.auth import Secret

from haystack.components.builders import PromptBuilder

from haystack_integrations.components.embedders.nvidia import NvidiaTextEmbedder

from haystack_integrations.components.generators.nvidia import NvidiaGenerator

from haystack_integrations.components.retrievers.qdrant import QdrantEmbeddingRetriever

# initialize NvidiaTextEmbedder with the self-hosted Embedding NIM URL

embedder = NvidiaTextEmbedder(
    model=embedding_nim_model,
    api_url=f"{embedding_nim_api_url}/v1"
)

# initialize NvidiaGenerator with the self-hosted LLM NIM URL

generator = NvidiaGenerator(
    model=llm_nim_model_name,
    api_url=f"{llm_nim_base_url}/v1",
    model_arguments={
        "temperature": 0.5,
        "top_p": 0.7,
        "max_tokens": 2048,
    },
)

retriever = QdrantEmbeddingRetriever(document_store=document_store)

prompt = """Answer the question given the context.
Question: {{ query }}
Context:
{% for document in documents %}
    {{ document.content }}
{% endfor %}
Answer:"""

prompt_builder = PromptBuilder(template=prompt)

# Create the RAG Pipeline

rag = Pipeline()

rag.add_component("embedder", embedder)

rag.add_component("retriever", retriever)

rag.add_component("prompt", prompt_builder)

rag.add_component("generator", generator)

rag.connect("embedder.embedding", "retriever.query_embedding")

rag.connect("retriever.documents", "prompt.documents")

rag.connect("prompt", "generator")

<haystack.core.pipeline.pipeline.Pipeline object at 0x7f267956e130>

🚅 Components

- embedder: NvidiaTextEmbedder

- retriever: QdrantEmbeddingRetriever

- prompt: PromptBuilder

- generator: NvidiaGenerator

🛤️ Connections

- embedder.embedding -> retriever.query_embedding (List[float])

- retriever.documents -> prompt.documents (List[Document])

- prompt.prompt -> generator.prompt (str)
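At query time, the retriever compares the query embedding produced by NvidiaTextEmbedder with the stored document embeddings and returns the closest matches. The brute-force sketch below illustrates that ranking with cosine similarity. It is a conceptual illustration only: Qdrant uses an approximate nearest-neighbor index rather than scanning every vector, and the similarity metric and number of results actually used depend on the QdrantDocumentStore and QdrantEmbeddingRetriever settings. The function names are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_embedding, documents, k=10):
    """Return the k (score, content) pairs most similar to the query.

    documents is a list of (content, embedding) tuples.
    """
    scored = [(cosine(query_embedding, emb), content) for content, emb in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]
```

The retrieved contents are what the prompt builder then inserts into the Context section of the template.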

Let’s now query the RAG pipeline with a question about the ChipNeMo model.

# Request the RAG pipeline

question = "Describe chipnemo in detail?"

result = rag.run(
    {
        "embedder": {"text": question},
        "prompt": {"query": question},
    },
    include_outputs_from=["prompt"],
)

print(result["generator"]["replies"][0])

ChipNeMo is a domain-adapted large language model (LLM) designed for chip design, which aims to explore the applications of LLMs for industrial chip design. It is developed by reusing public training data from other language models, with the intention of preserving general knowledge and natural language capabilities during domain adaptation. The model is trained using a combination of natural language and code datasets, including Wikipedia data and GitHub data, and is evaluated on various benchmarks, including multiple-choice questions, code generation, and human evaluation. ChipNeMo implements multiple domain adaptation techniques, including pre-training, domain adaptation, and fine-tuning, to adapt the LLM to the chip design domain. The model is capable of understanding internal HW designs and explaining complex design topics, generating EDA scripts, and summarizing and analyzing bugs.
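The last two pipeline stages can also be reproduced by hand, which helps when debugging what the LLM actually sees. Because the run above used include_outputs_from=["prompt"], the rendered prompt is available in result["prompt"]["prompt"]. The sketch below approximates that prompt in plain Python (whitespace may differ from the Jinja2 rendering) and sends it to the LLM NIM with the same sampling settings, assuming the NIM's OpenAI-compatible chat completions endpoint at {llm_nim_base_url}/v1/chat/completions. The helper names are hypothetical, and NvidiaGenerator may format its requests differently.

```python
import json
import urllib.request


def build_prompt(query, documents):
    """Approximate the RAG template: question, retrieved context, then 'Answer:'."""
    lines = ["Answer the question given the context.", f"Question: {query}", "Context:"]
    lines.extend(documents)  # one retrieved document's content per entry
    lines.append("Answer:")
    return "\n".join(lines)


def chat_payload(prompt, model, temperature=0.5, top_p=0.7, max_tokens=2048):
    """OpenAI-style chat completions body using the generator's model_arguments."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "top_p": top_p,
        "max_tokens": max_tokens,
    }


def complete(base_url, prompt, model, timeout=120.0, **sampling):
    """POST the prompt to {base_url}/v1/chat/completions and return the first reply."""
    body = json.dumps(chat_payload(prompt, model, **sampling)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=body,
        headers={"Content-Type": "application/json", "Accept": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        result = json.loads(response.read().decode("utf-8"))
    return result["choices"][0]["message"]["content"]
```

For example, complete(llm_nim_base_url, result["prompt"]["prompt"], llm_nim_model_name) should return an answer comparable to the pipeline's; with temperature 0.5, the wording will vary between runs.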

This notebook shows how to build a Haystack RAG pipeline using self-deployed generative AI models with NVIDIA Inference Microservices (NIMs).

Please check the documentation on how to deploy NVIDIA NIMs in your own environment.

For experimentation purposes, you can also substitute the self-deployed models with NIMs hosted by NVIDIA at ai.nvidia.com.